What Happens If OpenAI Uses Google AI Chips Over Nvidia?

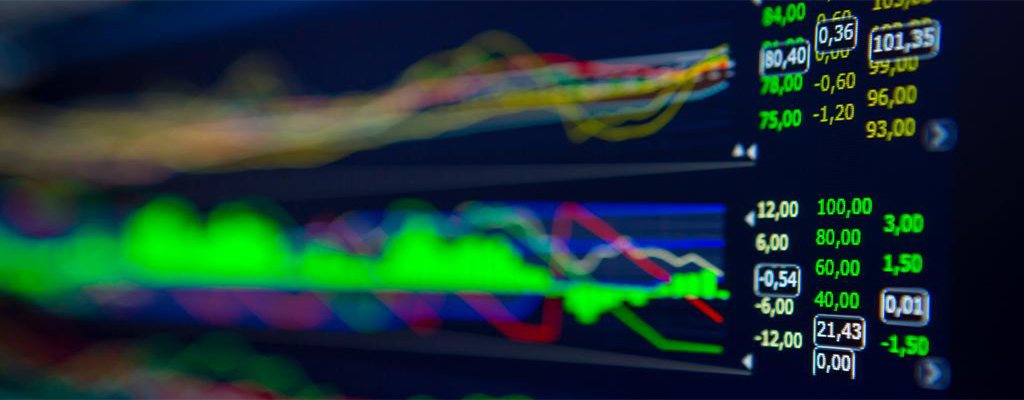

Stock markets quickly dismissed the risk to Nvidia (NVDA) after reports that OpenAI was considering Alphabet's (GOOG) (GOOGL) AI chips.

NVDA stock closed last week at a market capitalization of nearly $4 trillion.

The Information reported that OpenAI had started using Google TPUs (tensor processing units) to power products such as ChatGPT. That would give the AI firm significant leverage in negotiations with Nvidia. However, Alphabet did not rent its top-end TPUs to OpenAI, which suggests the search engine giant is reserving those chips for its own Gemini AI.

AI Demand To Grow Exponentially

Tech investors should model near-unlimited demand for AI chips. OpenAI needs all the computing power it can get to train its models and run inference on them. Features such as deep research, video and image generation, and reasoning are especially compute-intensive.

As a result, OpenAI would still need to keep using Nvidia's Blackwell and Hopper GPUs.

AMD Stock Jumped

Investors are betting that Advanced Micro Devices (AMD) has an AI server that rivals Nvidia's. Its MI350X and MI400 AI accelerators give OpenAI another option. The MI400 series has 432 GB of memory based on the HBM4 standard, and the higher memory bandwidth should deliver a performance increase.